ChEESE participated in the virtual EGU General Assembly (vEGU21), held on 19-30 April 2021, giving 14 vPICO presentations in the second week of the event.

Nine of the presentations were part of the ChEESE-organised session titled "Towards Exascale Supercomputing in Solid Earth Geoscience and Geohazards", which took place on 29 April from 11:00 am to 11:45 am. This session, convened by ChEESE researchers Arnau Folch (BSC), Steven Gibbons (NGI), Marisol Monterrubio-Velasco (BSC), Jean-Pierre Vilotte (IPGP) and Sara Aniko Wirp (LMU), included the following talks about ChEESE research:

Leonardo Mingari (BSC) - "Ensemble-based data assimilation of volcanic aerosols using FALL3D+PDAF"

Beatriz Martínez Montesinos (INGV) - "Probabilistic Tephra Hazard Assessment of Campi Flegrei, Italy"

Otilio Rojas (BSC) - "Towards physics-based PSHA using CyberShake in the South Iceland Seismic Zone"

Nathanael Schaeffer (IPGP/UGA) - "Efficient spherical harmonic transforms on GPU and its use in planetary core dynamics simulations"

Natalia Poiata (IPGP) - "Data-streaming workflow for seismic source location with PyCOMPSs parallel computational framework"

Federico Brogi (INGV) - "Optimization strategies for efficient high-resolution volcanic plume simulations with OpenFOAM"

Marta Pienkowska (ETH) - "Deterministic modelling of seismic waves in the Urgent Computing context: progress towards a short-term assessment of seismic hazard"

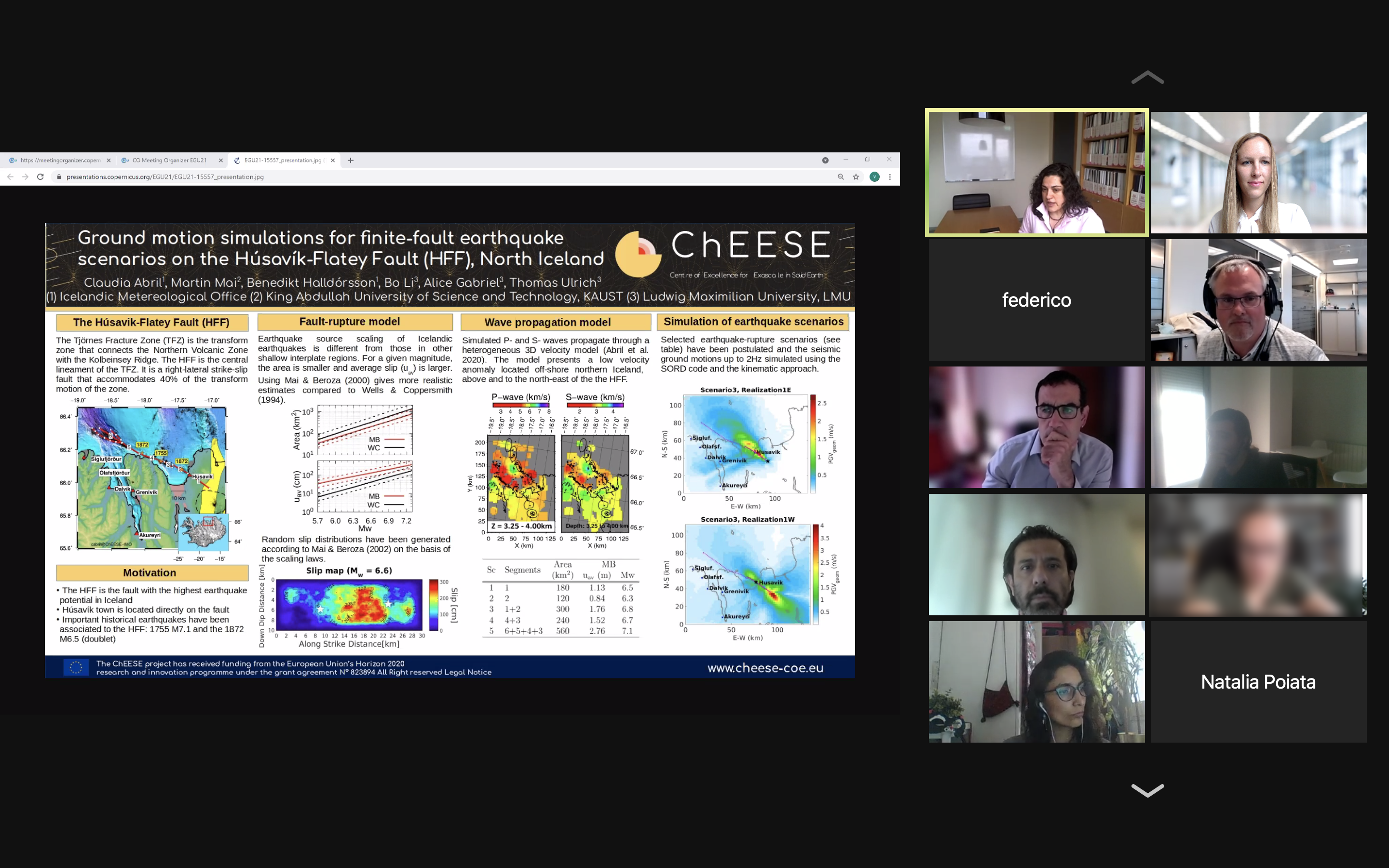

Claudia Abril (IMO) - "Ground motion simulations for finite-fault earthquake scenarios on the Húsavík-Flatey Fault, North Iceland"

Eduardo César Cabrera Flores (BSC) - "A hybrid system for the near real-time modeling and visualization of extreme magnitude earthquakes"

Besides presenting in the session organised by the project, ChEESE researchers also participated in other vEGU21 sessions. Their talks included:

Sara Aniko Wirp (LMU) - "3D linked megathrust, dynamic rupture and tsunami propagation and inundation modeling: Dynamic effects of supershear and tsunami earthquakes" (Tsunamis: from source processes to coastal hazard and warning session)

Steven Gibbons (NGI) - "The Sensitivity of Tsunami run-up to Earthquake Source Parameters and Manning Friction Coefficient in High-Resolution Inundation Simulations" (Tsunamis: from source processes to coastal hazard and warning session)

Marisol Monterrubio-Velasco (BSC) - "Source Parameter Sensitivity of Earthquake Simulations assisted by Machine Learning" (Advances in Earthquake Forecasting and Model Testing session)

Manuel Titos (IMO) - "Assessing potential impacts on the air traffic routes due to an ash-producing eruption on Jan Mayen Island (Norway)" (Volcano hazard modelling session)

Fabian Kutschera (LMU) - "Linking dynamic earthquake rupture to tsunami modeling for the Húsavík-Flatey transform fault system in North Iceland" (Linking active faults and the earthquake cycle to Seismic Hazard Assessment: Onshore and Offshore Perspectives session)

In addition to the ChEESE vPICO presentations, project partner Alice-Agnes Gabriel (LMU) co-organised the "Physics-based earthquake modeling and engineering" session on 26 April 2021.